Automated Gender Recognition Systems in Africa: Uncovering Bias and Discrimination

Phionah Achieng Uhuru | May 29, 2023 | Artificial Intelligence, Digital Rights

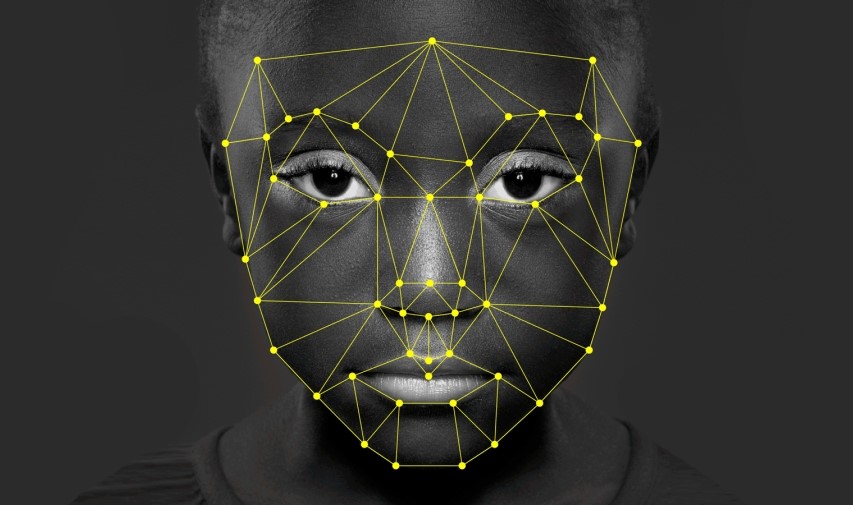

Gender data plays a vital role in decision-making across many online platforms and services. Gender-targeted advertising platforms, content moderation systems, and security agencies rely heavily on gender-related data in their operations, using algorithms to categorize people by gender, race, socioeconomic status, and other factors. This article aims to expose the biases and discrimination within automated gender recognition systems (AGRS) from an African perspective.

Introduction

Automated gender recognition systems (AGRS) are becoming increasingly prevalent in Africa. Many of these technologies are developed by private companies as part of the drive to build ‘smart cities’ across the continent. They use facial recognition and other biometric data to classify a person’s gender from their physical appearance or voice, and they are being deployed in a growing range of contexts, including security, marketing, and healthcare. Although these systems may appear to be useful tools for data collection and analysis, they have been shown to be highly biased and discriminatory, especially against darker-skinned people, women, and gender-nonconforming individuals. In this blog post, we explore the biases and discriminatory effects of AGRS in Africa and highlight ways in which these systems can be improved to address these issues.

The Biases of AGRS

AGRS are built on machine learning algorithms trained on large datasets of images or audio recordings. However, these datasets are often skewed and reflect societal prejudices and stereotypes. For example, a face dataset used to train an AGRS may contain predominantly male faces, or may lack diversity in race, age, or other characteristics. Because a model learns to associate gender with whatever features dominate its training data, it often makes incorrect assumptions about a person’s gender based on physical or vocal traits tied to gender stereotypes. An AGRS may, for instance, misgender a woman with short hair or a deep voice, because these features are socially construed as male. Such biases can contribute to the over-policing of Black people, women, and gender-nonconforming individuals, who may be denied access to services, subjected to discrimination, or even put in danger when the system is used in security contexts.

The Discriminatory Effects of AGRS

The use of biometric technologies such as AGRS is becoming increasingly common in Africa, particularly for security and surveillance. In South Africa, for example, the police have been using automated facial recognition technology to identify suspects, and in Kenya the same kind of technology is used to identify suspects and support security management. However, these technologies have been shown to be highly biased and discriminatory, particularly against darker-skinned and gender-nonconforming individuals. A 2018 study of commercial gender classification systems, for example, found error rates of up to 34.7% for darker-skinned women, compared with at most 0.8% for lighter-skinned men. This disparity is likely due to biases in the training datasets, which may have contained far more lighter-skinned faces. Moreover, using AGRS in security contexts can exacerbate existing biases and discrimination against marginalized groups: women and gender-nonconforming individuals may be targeted for surveillance or harassment based on their appearance or voice, or may be denied access to services because of incorrect gender assumptions made by the system.

Improving AGRS

To mitigate the inherent biases and discriminatory effects of AGRS in Africa, it is important to improve the datasets used to train these systems. Training data should be diverse and representative, covering different gender identities and other characteristics such as race, age, and disability, so that the system is not biased against any particular group. African countries need to ensure that biometric technologies introduced to the market rely on context-specific algorithms before they are deployed, so that the training data accurately reflects local demographics. It is also important to involve diverse stakeholders in the development and deployment of these systems, including women, gender-nonconforming individuals, and representatives of marginalized communities. This helps ensure that the systems are designed and implemented in a way that is sensitive to the needs and concerns of these groups.
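One practical way to check whether a training dataset reflects the population a system will serve is to compare each group’s share of the data with a reference share. The sketch below is a minimal illustration under stated assumptions: the function name `representation_gaps`, the group labels, and the equal reference shares are all hypothetical placeholders, not real demographic figures or any vendor’s method. In practice, the categories and reference shares would have to come from local data.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, tolerance=0.05):
    """Find groups that are under-represented in a dataset (hypothetical helper).

    group_labels: one group label per sample in the dataset.
    reference_shares: {group: expected share of the population}.
    Returns {group: (dataset_share, reference_share)} for every group whose
    dataset share is more than `tolerance` below its reference share.
    Over-represented groups are not flagged.
    """
    counts = Counter(group_labels)  # missing groups count as 0
    total = len(group_labels)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total if total else 0.0
        if expected - observed > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Toy example: hypothetical labels and equal reference shares (placeholders only).
labels = (
    ["lighter_male"] * 60 + ["lighter_female"] * 25
    + ["darker_male"] * 10 + ["darker_female"] * 5
)
reference = {
    "lighter_male": 0.25,
    "lighter_female": 0.25,
    "darker_male": 0.25,
    "darker_female": 0.25,
}
print(representation_gaps(labels, reference))
```

With these toy numbers, only the two darker-skinned groups are flagged as under-represented. A check like this only measures who appears in the data; it says nothing about how the data was labeled.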

In addition, transparent and accountable governance mechanisms need to be established. This involves creating a clear legal framework and ethical guidelines governing the development, deployment, and use of automated gender recognition systems. These regulations should subject AGRS to regular audits, oversight, and independent evaluations to identify and rectify biases or discriminatory effects, and there should be mechanisms to hold organizations and individuals accountable for any misuse of, or harm caused by, these systems. Robust governance structures make it possible to address bias and discrimination in AGRS while also safeguarding individuals’ rights and privacy. Through these measures, we can work toward more equitable and inclusive AGRS that do not perpetuate bias or discrimination against marginalized communities, in Africa and beyond.
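Regular audits often rely on disaggregated evaluation: measuring a system’s error rate separately for each demographic group instead of reporting one overall accuracy figure, which can hide large gaps between groups. The following is a minimal sketch of that idea, not a standard auditing tool. The function names, the record format `(group, self_reported_gender, predicted_gender)`, and the `max_gap` threshold are hypothetical choices made for illustration, and the data is invented.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: error_rate} from (group, true_label, predicted_label) tuples.

    `true_label` is assumed to be the person's self-reported gender.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, true_label, predicted_label in records:
        totals[group] += 1
        if predicted_label != true_label:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def audit(records, max_gap=0.05):
    """Fail the system if the worst group's error rate exceeds the best's by more than max_gap."""
    rates = error_rates_by_group(records)
    if not rates:
        raise ValueError("no records to audit")
    worst = max(rates, key=rates.get)
    best = min(rates, key=rates.get)
    gap = rates[worst] - rates[best]
    return {
        "rates": rates,
        "worst_group": worst,
        "best_group": best,
        "gap": gap,
        "passes": gap <= max_gap,
    }


# Toy data with hypothetical groups "a" and "b" (not real results).
records = (
    [("a", "woman", "woman")] * 95 + [("a", "woman", "man")] * 5
    + [("b", "woman", "woman")] * 70 + [("b", "woman", "man")] * 30
)
result = audit(records)
print(result["worst_group"], round(result["gap"], 2), result["passes"])
```

In this toy data, group `b` is misclassified six times as often as group `a`, so the audit fails. Where to set the acceptable gap is a policy decision rather than a technical one.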

Conclusion

It is important to note that these recommendations are not a panacea for bias or discrimination in biometric technologies. Rather, they should be part of a broader set of strategies to address these issues. Several African countries have also passed laws aimed at mitigating the unintended consequences of emerging technologies such as AGRS. However, the rapid advancement of these technologies constantly threatens to outpace the law and lawmakers. Addressing their unintended consequences therefore requires proactive, collaborative efforts from all stakeholders involved in designing, developing, and deploying biometric technologies.